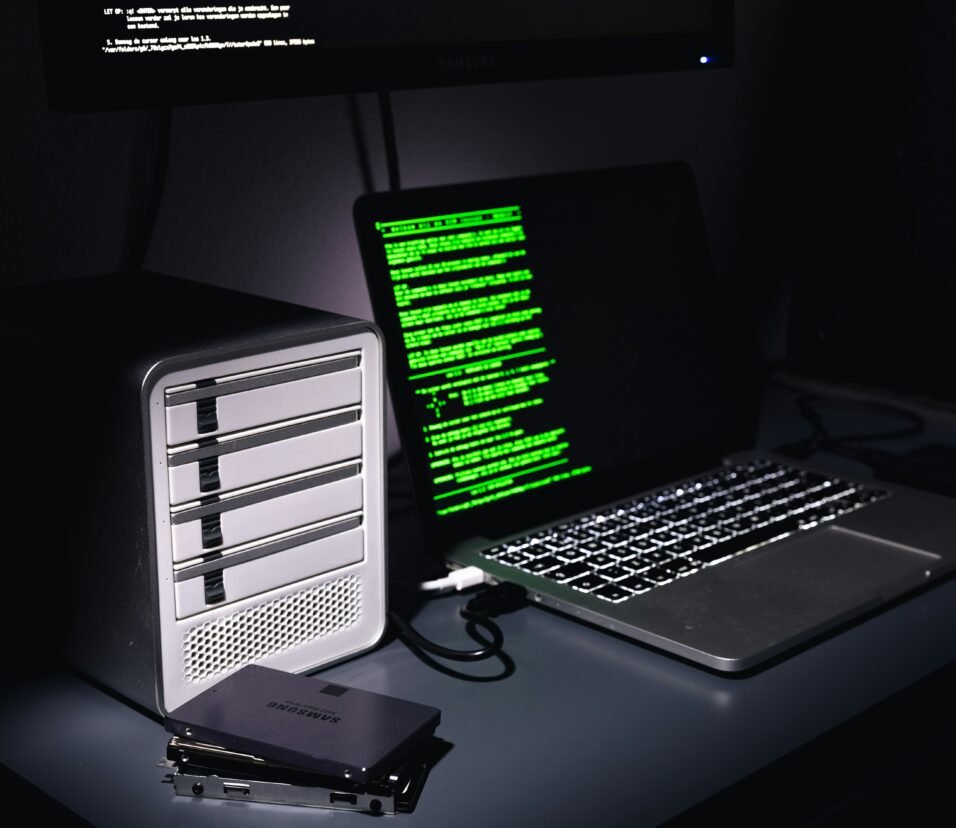

Specialized Computing in 2025: Tailoring Hardware & Architectures for an AI-Driven World

From GPUs to TPUs, quantum chips to neuromorphic processors—2025 is no longer about “faster” computing, but smarter, more task-specific computing. Welcome to the era of Specialized Computing, where every workload gets its own custom-built engine.

🔍 What Is Specialized Computing?

Specialized computing refers to the design and use of hardware and software optimized for a specific type of computation or task, rather than general-purpose computing.

It includes:

- Accelerators like GPUs, TPUs, FPGAs

- Custom silicon (e.g. Apple’s Neural Engine, Tesla Dojo)

- Edge AI chips (like Google’s Coral or NVIDIA Jetson)

- Quantum processors

- Neuromorphic chips (brain-inspired architectures)

🧩 Why Specialized Computing Matters (Now More Than Ever)

1. ⚡ The AI Boom

Massive LLMs (like GPT-5), computer vision models, and reinforcement learning agents all require parallel, high-throughput computation—best handled by GPUs, TPUs, or ASICs.

2. 🛰️ Edge AI & IoT

Devices like drones, smart sensors, and wearables need to run ML models locally under tight power and latency budgets that general-purpose CPUs often can’t meet.

3. 💸 Cloud Economics

Purpose-built silicon can deliver up to 10x the performance of general-purpose CPUs on the workloads it targets, at a fraction of the energy and cost.
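To make the economics concrete, here is a minimal sketch of how one might compare two instance types on cost and energy per million inferences. Every figure below (throughput, hourly price, power draw) is a made-up placeholder, not a measurement or a vendor quote, and the `Instance` class and both helper functions are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """A hypothetical cloud instance; every number here is a placeholder."""
    name: str
    inferences_per_sec: float  # sustained throughput
    price_per_hour: float      # USD
    power_watts: float         # average draw under load


def cost_per_million(inst: Instance) -> float:
    """USD to serve one million inferences at full utilization."""
    seconds = 1_000_000 / inst.inferences_per_sec
    return inst.price_per_hour * seconds / 3600


def kwh_per_million(inst: Instance) -> float:
    """Energy in kWh to serve one million inferences."""
    seconds = 1_000_000 / inst.inferences_per_sec
    return inst.power_watts * seconds / 3_600_000  # watt-seconds -> kWh


cpu = Instance("cpu-node", inferences_per_sec=200, price_per_hour=1.00, power_watts=250)
accel = Instance("accel-node", inferences_per_sec=2000, price_per_hour=3.00, power_watts=300)

for inst in (cpu, accel):
    print(f"{inst.name}: ${cost_per_million(inst):.2f} and "
          f"{kwh_per_million(inst):.3f} kWh per million inferences")
```

With these placeholder numbers the accelerator costs three times as much per hour yet comes out cheaper per inference, because throughput grows faster than price. The comparison assumes full utilization, so an accelerator that sits idle can easily lose that advantage.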

4. 🧬 Scientific Computing

Drug discovery, genomics, and climate simulations rely on domain-specific architectures to crunch petabyte-scale datasets.

🛠️ Types of Specialized Computing Hardware

| Type | Purpose | Examples |
|---|---|---|
| GPU | Parallel tasks (e.g., ML, rendering) | NVIDIA A100, AMD Instinct |
| TPU (Tensor Processing Unit) | Deep learning training/inference | Google TPU v5e |
| FPGA (Field-Programmable Gate Array) | Reconfigurable logic for custom tasks | Intel Stratix, Xilinx |
| ASIC (Application-Specific IC) | Fixed-function accelerators | Tesla Dojo, Apple Neural Engine |
| NPU (Neural Processing Unit) | AI in mobile/edge devices | Huawei Ascend, Qualcomm Hexagon |
| Quantum Processors | Quantum algorithms & cryptography | IBM Q, Google Sycamore |
| Neuromorphic Chips | Brain-like spiking neural nets | Intel Loihi, IBM TrueNorth |

🧠 Specialized Chips Powering AI in 2025

| Task | Chip |
|---|---|
| Text generation (LLMs) | NVIDIA H100, Google TPU v5p |
| Edge inference | Google Coral, SiMa.ai, Hailo-8 |
| Training recommendation engines | Meta’s MTIA, Amazon Trainium |
| Video analytics | Movidius VPU, Jetson Orin |
| Genomics & bioinformatics | Graphcore IPU, Cerebras CS-3 |
| Multimodal AI | GroqChip, SambaNova RDU |

🧬 Real-World Applications

🚗 Autonomous Vehicles

Tesla’s Dojo supercomputer trains driving models on video collected from millions of miles of fleet driving, using custom D1 chips built for large-scale training throughput. Real-time inference runs on separate in-car hardware.

🧬 Healthcare

Genomic sequence analysis increasingly runs on accelerators such as FPGAs, cutting time-to-insight for drug discovery. Neuromorphic chips remain largely experimental in this area.

📱 Smartphones

Apple’s Neural Engine powers features like Face ID, object detection, and real-time translation—on-device, with no cloud latency.

🔐 Security & Energy Considerations

🔒 Security:

- Hardware-level isolation and encrypted memory regions

- Secure boot + ML-specific threat models (e.g., model inversion, data poisoning)

⚡ Energy:

- Data-center GPUs can draw hundreds of watts per chip, which rules them out for many power-constrained use cases

- Some edge AI chips run inference on a few watts or less

- Sustainable AI efforts now focus on green chip design and carbon-aware training schedules
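A carbon-aware schedule can be as simple as picking the cleanest window in a grid forecast. The sketch below is one possible approach, not a description of any particular scheduler: `best_start_hour` is a hypothetical helper, and the forecast values are invented for illustration.

```python
def best_start_hour(forecast: list[float], job_hours: int) -> int:
    """Return the start index whose window has the lowest total carbon intensity."""
    if not 0 < job_hours <= len(forecast):
        raise ValueError("job must fit inside the forecast window")
    window = sum(forecast[:job_hours])
    best_total, best_start = window, 0
    for start in range(1, len(forecast) - job_hours + 1):
        # Slide the window one hour: drop the oldest value, add the newest.
        window += forecast[start + job_hours - 1] - forecast[start - 1]
        if window < best_total:
            best_total, best_start = window, start
    return best_start


# Hypothetical hourly grid carbon intensity (gCO2/kWh) for the next 12 hours.
forecast = [420, 410, 390, 300, 220, 180, 170, 210, 320, 400, 430, 440]
start = best_start_hour(forecast, job_hours=3)
print(f"Start the 3-hour job at hour {start}")  # Start the 3-hour job at hour 5
```

A real scheduler would also weigh deadlines, hardware availability, and whether the job can be paused, but the core idea is the same: shift flexible work toward hours when the grid is cleaner.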

📉 Challenges in Specialized Computing

| Challenge | Fix |
|---|---|
| 🔧 Complexity of programming | Use abstraction layers: ONNX, TensorRT, PyTorch/XLA |
| 📈 Hardware availability | Cloud access to GPUs/TPUs via Colab, Paperspace, AWS |
| 🧬 Fragmented ecosystem | Open compiler projects and standards like MLIR, TVM, and WebNN gaining traction |
| ⚠️ Vendor lock-in | Use cross-platform frameworks like Triton, Hugging Face Optimum |
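The abstraction-layer idea behind several of these fixes can be sketched in a few lines: application code targets one interface, and a registry picks whichever implementation is available. This is a toy pattern under stated assumptions, not the API of ONNX, Triton, or any other tool named above. The names `register`, `pick_backend`, and `vendor_npu` are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

# A "backend" here is just a function that multiplies a matrix by a vector.
MatVec = Callable[[Sequence[Sequence[float]], Sequence[float]], List[float]]

_BACKENDS: Dict[str, MatVec] = {}


def register(name: str) -> Callable[[MatVec], MatVec]:
    """Decorator that adds an implementation to the registry under `name`."""
    def wrap(fn: MatVec) -> MatVec:
        _BACKENDS[name] = fn
        return fn
    return wrap


@register("cpu")
def matvec_cpu(matrix, vector):
    """Portable reference implementation that always works."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def pick_backend(preferred: Sequence[str]) -> MatVec:
    """Return the first registered backend in preference order."""
    for name in preferred:
        if name in _BACKENDS:
            return _BACKENDS[name]
    raise RuntimeError(f"no backend available from {list(preferred)}")


# "vendor_npu" is a made-up name; it is not registered, so we fall back to CPU.
matvec = pick_backend(["vendor_npu", "cpu"])
print(matvec([[1, 2], [3, 4]], [10, 1]))  # [12, 34]
```

Because callers only ever see `matvec`, swapping in a faster backend later means registering one more function rather than rewriting the application.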

🔮 The Future: What’s Coming Next?

1. LLM-Specific Chips

Silicon optimized specifically for transformer models and attention mechanisms.
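For a sense of what such silicon has to accelerate, here is scaled dot-product attention, softmax(QKᵀ/√d)V, written in plain Python. It is a readable reference sketch rather than how any particular chip implements it, and the tiny `Q`, `K`, `V` matrices are arbitrary illustrative inputs.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    out = []
    for q in queries:
        # One dot product per key: the matrix-multiply part hardware accelerates.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
for row in attention(Q, K, V):
    print([round(x, 3) for x in row])
```

The work is dominated by two matrix products with a softmax in between, and the score matrix grows with the square of the sequence length. That regular, memory-hungry pattern is what makes attention a natural target for dedicated hardware.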

2. Quantum-AI Hybrids

Quantum pre-processing for massive input spaces, followed by classical deep learning inference.

3. AI Compilers

Auto-tune ML models to run best on target hardware (e.g., a compiler that rewrites your model for Jetson vs TPU).
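The core loop of auto-tuning is easy to sketch: generate variants of the same computation, time each on the target machine, and keep the fastest. The example below tunes the tile size of a blocked matrix multiply. It is a toy under stated assumptions, with `blocked_matmul` and `autotune` as hypothetical helpers and the candidate tile sizes chosen arbitrarily.

```python
import time


def blocked_matmul(a, b, tile):
    """Multiply square matrices a and b, iterating in tile x tile blocks."""
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, tile):
        for k0 in range(0, n, tile):
            for j0 in range(0, n, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_c, row_a = c[i], a[i]
                    for k in range(k0, min(k0 + tile, n)):
                        aik, row_b = row_a[k], b[k]
                        for j in range(j0, min(j0 + tile, n)):
                            row_c[j] += aik * row_b[j]
    return c


def autotune(a, b, tiles, repeats=3):
    """Time each candidate tile size and return (best_tile, timings)."""
    timings = {}
    for tile in tiles:
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            blocked_matmul(a, b, tile)
            best = min(best, time.perf_counter() - start)
        timings[tile] = best  # keep the fastest run to reduce noise
    return min(timings, key=timings.get), timings


n = 32
a = [[float(i + j) for j in range(n)] for i in range(n)]
b = [[float(i == j) for j in range(n)] for i in range(n)]  # identity matrix
best_tile, timings = autotune(a, b, tiles=[4, 8, 16, 32])
print(f"fastest tile size on this machine: {best_tile}")
```

In pure Python the differences mostly reflect interpreter overhead rather than cache behavior, so the winner will vary from run to run. The point is the search structure: a production compiler explores a far larger space of schedules, but it still measures or models each candidate against the specific target.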

4. Synthetic Brain Chips

Chips that don’t run conventional code but spike, fire, and “learn” like neurons (watch Intel’s Loihi line closely).
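To see what "spike and fire" means in practice, here is a leaky integrate-and-fire neuron, a standard textbook model of spiking behavior. It is a software illustration only and makes no claim about how Loihi or any other chip works internally. The `threshold`, `leak`, and `reset` values are arbitrary.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Leaky integrate-and-fire neuron in discrete time.

    Each step the membrane potential decays by `leak`, adds the input,
    and emits a spike (1) if it reaches `threshold`, then resets.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


# Constant weak input: the neuron charges for several steps before it fires.
print(simulate_lif([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The output is a sparse train of events rather than a dense activation value. Neuromorphic hardware exploits that sparsity: nothing needs to be computed or communicated while a neuron is silent.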

5. Edge + Cloud Handoff Architectures

Models split across layers: low-latency inference on-device, high-compute retraining in the cloud.
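One way to read "split across layers" is split inference: the device runs as many leading layers as its compute budget allows, then hands the intermediate result to the cloud. The sketch below assumes that interpretation. The per-layer costs, the budget, and the toy lambda "layers" are all invented for illustration, and `choose_split` and `run_split` are hypothetical helpers.

```python
def choose_split(layer_costs, device_budget):
    """Return how many leading layers fit within the device's compute budget."""
    spent = 0.0
    for index, cost in enumerate(layer_costs):
        if spent + cost > device_budget:
            return index
        spent += cost
    return len(layer_costs)


def run_split(layers, split, x):
    """Run layers[:split] 'on device' and layers[split:] 'in the cloud'."""
    for layer in layers[:split]:
        x = layer(x)
    intermediate = x  # this is what would be sent over the network
    for layer in layers[split:]:
        x = layer(x)
    return intermediate, x


# Toy "layers" and hypothetical per-layer costs (arbitrary units).
layers = [lambda v: v + 1, lambda v: v * 2, lambda v: v - 3, lambda v: v * v]
costs = [1.0, 2.0, 4.0, 8.0]

split = choose_split(costs, device_budget=3.5)
sent, result = run_split(layers, split, x=5)
print(f"device runs {split} layers, sends {sent}, cloud returns {result}")
# device runs 2 layers, sends 12, cloud returns 81
```

A real system would also account for the size of the intermediate tensor and the network round trip, since a cheap split point is no help if the handoff itself is slow.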

✅ TL;DR – Specialized Computing in 2025

| Topic | Summary |
|---|---|
| Definition | Hardware/software optimized for specific tasks (AI, graphics, bioinformatics) |
| Why It Matters | Speed, efficiency, cost savings, and unlocking use cases |
| Types | GPUs, TPUs, FPGAs, ASICs, NPUs, Quantum, Neuromorphic |
| Top Trends | LLM chips, Edge AI, AI compilers, green silicon |
| Challenges | Complexity, hardware access, ecosystem fragmentation, vendor lock-in |
| Future | AI-powered chips, synthetic cognition, hybrid compute models |